Workflows and Viewing Results¶

This section will describe different parts of workflows for running, viewing detailed results, and exporting Jobs and Records.

Sub-sections include:

Record Versioning¶

In an effort to preserve various stages of a Record through harvest, possible multiple transformation, merges and sub-dividing, Combine takes the approach of copying the Record each time.

As outlined in the Data Model, Records are represented in both MySQL and ElasticSearch. Each time a Job is run, and a Record is duplicated, it gets a new row in MySQL, with the full XML of the Record duplicated. Records are associated with each other across Jobs by their Combine ID.

This approach has pros and cons:

- Pros

- simple data model, each version of a Record is stored separately

- each Record stage can be indexed and analyzed separately

- Jobs containing Records can be deleted without effecting up/downstream Records (they will vanish from the lineage)

- Cons

- duplication of data is potentially unnecessary if Record information has not changed

Running Jobs¶

Note: For all Jobs in Combine, confirm that an active Livy session is up and running before proceeding.

All Jobs are tied to, and initiated from, a Record Group. From the Record Group page, at the bottom you will find buttons for starting new jobs:

Once you work through initiating the Job, and configuring the optional parameters outlined below, you will be returned to the Record Group screen and presented with the following job lineage “graph” and a table showing all Jobs for the Record Group:

The graph at the top shows all Jobs for this Record Group, and their relationships to one another. The edges between nodes show how many Records were used as input for the target Job, and whether or not they were “all”, “valid”, or “invalid” Records.

This graph is zoomable and clickable. This graph is designed to provide some insight and context at a glance, but the table below is designed to be more functional.

The table shows all Jobs, with optional filters and a search box in the upper-right. The columns include:

Job ID- Numerical Job ID in CombineTimestamp- When the Job was startedName- Clickable name for Job that leads to Job details, optionally given one by user, or a default is generated. This is editable anytime.Organization- Clickable link to the Organization this Job falls underRecord Group- Clickable link to the Record Group this Job falls under (as this table is reused throughout Combine, it can sometimes contain Jobs from other Record Groups)Job Type- Harvest, Transform, Merge, or AnalysisLivy Status- This is the status of the Job in Livy

gone- Livy has been restarted or stopped, and no information about this Job is availableavailable- Livy reports the Job as complete and availablewaiting- The Job is queued behind others in Livyrunning- The Job is currently running in LivyFinished- Though Livy does the majority of the Job processing, this indicates the Job is finished in the context of CombineIs Valid- True/False, True if no validations were run or all Records passed validation, False if any Records failed any validationsPublishing- Buttons for Publishing or Unpublishing a JobElapsed- How long the Job has been running, or tookInput- All input Jobs used for this JobNotes- Optional notes field that can be filled out by User here, or in Job DetailsTotal Record Count- Total number of successfully processed RecordsMonitor- Links for more details about the Job. See Spark and Livy documentation for more information.Actions- Link for Job details

To move Jobs from one Record Group to another, select the Jobs from the table with their checkboxes and then in the table at the bottom, select the target Record Group and click “Move Selected Jobs”.

To delete Jobs, select Jobs via their checkboxes and click “Delete Selected Jobs” from the bottom management pane.

As Jobs can contain hundreds of thousands, even millions of rows, this process can take some time. Combine has background running tasks that handle some long running actions such as this, where using Spark is not appropriate. When a Job is deleted it will be grayed out and marked as “DELETED” while it is removed in the background.

Optional Parameters¶

When running any type of Job in Combine, you are presented with a section near the bottom for Optional Parameters for the job:

These options are split across various tabs, and include:

For the most part, a user is required to pre-configure these in the Configurations section, and then select which optional parameters to apply during runtime for Jobs.

Record Input Filters¶

When running a new Job, various filters can be applied to refine or limit the Records that will come from input Jobs selected. Currently, the following filters are supported:

Refine by Record Validity

Users can select if all, valid, or invalid Records should be included.

Below is an example of how those valves can be applied and utilized with Merge Jobs to select only only valid or invalid records:

Keep in mind, if multiple Validation Scenarios were run for a particular Job, it only requires failing one test, within one Validation Scenario, for the Record to be considered “invalid” as a whole.

Limit Number of Records

The simplest of the three, users can provide a number to limit the Records from each Job. For example, if 4 Jobs are selected as input, and a limit of 100 is entered, the resulting Job will have 400 Records: 4 x 100 = 400.

Refine by Mapped Fields

Users can provide an ElasticSearch DSL query, as JSON, to refine the records that will be used for this Job.

Take, for example, an input Job of 10,000 Records that has a field foo_bar, and 500 of those Records have the value baz for this field. If the following query is entered here, only the 500 Records that are returned from this query will be used for the Job:

{

"query":{

"match":{

"foo_bar":"baz"

}

}

}

This ability hints at the potential for taking the time to map fields in interesting and helpful ways, such that you can use those mapped fields to refine later Jobs by. ElasticSearch queries can be quite powerul and complex, and in theory, this filter will support any query used.

Field Mapping Configuration¶

Combine maps a Record’s original document – likely XML – to key/value pairs suitable for ElasticSearch with a library called XML2kvp. When running a new Job, users can provide parameters to the XML2kvp parser in the form of JSON.

Here’s an example of the default configurations:

{

"add_literals": {},

"concat_values_on_all_fields": false,

"concat_values_on_fields": {},

"copy_to": {},

"copy_to_regex": {},

"copy_value_to_regex": {},

"error_on_delims_collision": false,

"exclude_attributes": [],

"exclude_elements": [],

"include_all_attributes": false,

"include_attributes": [],

"node_delim": "_",

"ns_prefix_delim": "|",

"remove_copied_key": true,

"remove_copied_value": false,

"remove_ns_prefix": false,

"self_describing": false,

"skip_attribute_ns_declarations": true,

"skip_repeating_values": true,

"split_values_on_all_fields": false,

"split_values_on_fields": {}

}

Clicking the button “What do these configurations mean?” will provide information about each parameter, pulled form the XML2kvp JSON schema.

The default is a safe bet to run Jobs, but configurations can be saved, retrieved, updated, and deleted from this screen as well.

Additional, high level discussion about mapping and indexing metadata can also be found here.

Validation Tests¶

One of the most commonly used optional parameters would be what Validation Scenarios to apply for this Job. Validation Scenarios are pre-configured validations that will run for each Record in the Job. When viewing a Job’s or Record’s details, the result of each validation run will be shown.

The Validation Tests selection looks like this for a Job, with checkboxes for each pre-configured Validation Scenarios (additionally, checked if the Validation Scenario is marked to run by default):

Transform Identifier¶

When running a Job, users can optionally select a Record Identifier Transformation Scenario (RITS) that will modify the Record Identifier for each Record in the Job.

DPLA Bulk Data Compare¶

One somewhat experimental feature is the ability to compare the Record’s from a Job against a downloaded and indexed bulk data dump from DPLA. These DPLA bulk data downloads can be managed in Configurations here.

When running a Job, a user may optionally select what bulk data download to compare against:

Viewing Job Details¶

One of the most detail rich screens are the results and details from a Job run. This section outlines the major areas. This is often referred to as the “Job Details” page.

At the very top of an Job Details page, a user is presented with a “lineage” of input Jobs that relate to this Job:

Also in this area is a button “Job Notes” which will reveal a panel for reading / writing notes for this Job. These notes will also show up in the Record Group’s Jobs table.

Below that are tabs that organize the various parts of the Job Details page:

Records¶

This table shows all Records for this Job. It is sortable and searchable (though limited to what fields), and contains the following fields:

DB ID- Record’s Primary Key in MySQLCombine ID- identifier assigned to Record on creation, sticks with Record through all stages and JobsRecord ID- Record identifier that is acquired, or created, on Record creation, and is used for publishing downstream. This may be modified across Jobs, unlike theCombine ID.Originating OAI set- what OAI set this record was harvested as part ofUnique- True/False if theRecord IDis unique in this JobDocument- link to the Record’s raw, XML document, blank if errorError- explanation for error, if any, otherwise blankValidation Results- True/False if the Record passed all Validation Tests, True if none run for this Job

In many ways, this is the most direct and primary route to access Records from a Job.

Field Analysis¶

This tab provides a table of all indexed fields for this job, the nature of which is covered in more detail here:

Publish¶

This tab provides the means of publishing a single Job and its Records. This is covered in more detail in the Publishing section.

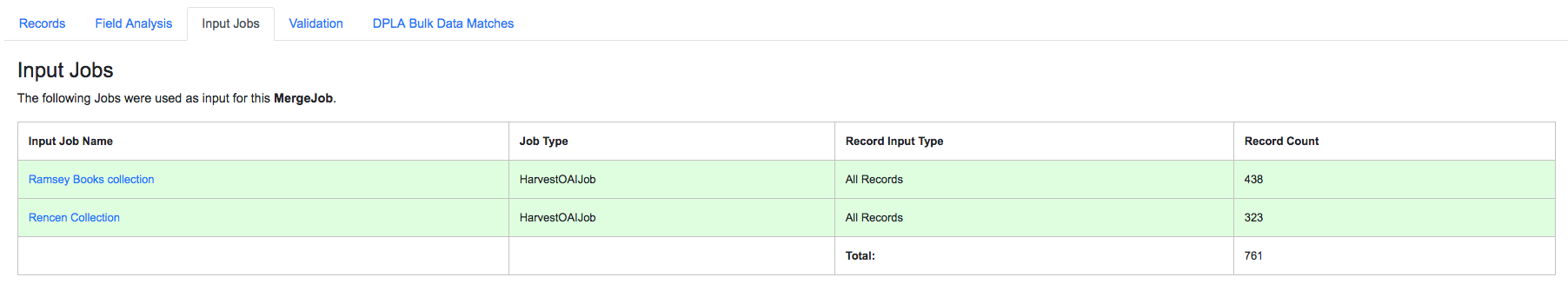

Input Jobs¶

This table shows all Jobs that were used as input Jobs for this Job.

Validation¶

This tab shows the results of all Validation tests run for this Job:

For each Validation Scenario run, the table shows the name, type, count of records that failed, and a link to see the failures in more detail.

More information about Validation Results can be found here.

DPLA Bulk Data Matches¶

If a DPLA bulk data download was selected to compare against for this Job, the results will be shown in this tab.

The following screenshot gives a sense of what this looks like for a Job containing about 250k records, that was compared against a DPLA bulk data download of comparable size:

This feature is still somewhat exploratory, but Combine provides an ideal environment and “moment in time” within the greater metadata aggregation ecosystem for this kind of comparison.

In this example, we are seeing that 185k Records were found in the DPLA data dump, and that 38k Records appear to be new. Without an example at hand, it is difficult to show, but it’s conceivable that by leaving Jobs in Combine, and then comparing against a later DPLA data dump, one would have the ability to confirm that all records do indeed show up in the DPLA data.

Spark Details¶

This tab provides helpful diagnostic information about the Job as run in in the background in Spark.

Spark Jobs/Tasks Run

Shows the actual tasks and stages as run by Spark. Due to how Spark runs, the names of these tasks may not be familiar or immediately obvious, but provide a window into the Job as it runs. This section also shows additioanl tasks that have been run for this Job such as re-indexing, or new validations.

Livy Statement Information

This section shows the raw JSON output from the Job as submitted to Apache Livy.

Job Type Details - Jobs¶

For each Job type – Harvest, Transform, Merge/Duplicate, and Analysis – the Job details screen provides a tab with information specific to that Job type.

All Jobs contain a section called Job Runtime Details that show all parameters used for the Job:

OAI Harvest Jobs

Shows what OAI endpoint was used for Harvest.

Static Harvest Jobs

No additional information at this time for Static Harvest Jobs.

Transform Jobs

The “Transform Details” tab shows Records that were transformed during the Job in some way. For some Transformation Scenarios, it might be assumed that all Records will be transformed, but others, may only target a few Records. This allows for viewing what Records were altered.

Clicking into a Record, and then clicking the “Transform Details” tab at the Record level, will show detailed changes for that Record (see below for more information).

Merge/Duplicate Jobs

No additional information at this time for Merge/Duplicate Jobs.

Analysis Jobs

No additional information at this time for Analysis Jobs.

Export¶

Records from Jobs may be exported in a variety of ways, read more about exporting here.

Record Documents

Exporting a Job as Documents takes the stored XML documents for each Record, distributes them across a user-defined number of files, exports as XML documents, and compiles them in an archive for easy downloading.

For example, 1000 records where a user selects 250 per file, for Job #42, would result in the following structure:

- archive.zip|tar

- j42/ # folder for Job

- part00000.xml # each XML file contains 250 records grouped under a root XML element <documents>

- part00001.xml

- part00002.xml

- part00003.xml

The following screenshot shows the actual result of a Job with 1,070 Records, exporting 50 per file, with a zip file and the resulting, unzipped structure:

Why export like this? Very large XML files can be problematic to work with, particularly for XML parsers that attempt to load the entire document into memory (which is most of them). Combine is naturally pre-disposed to think in terms of the parts and partitions with the Spark back-end, which makes for convenient writing of all Records from Job in smaller chunks. The size of the “chunk” can be set by specifying the XML Records per file input in the export form. Finally, .zip or .tar files for the resulting export are both supported.

When a Job is exported as Documents, this will send users to the Background Tasks screen where the task can be monitored and viewed.

Viewing Record Details¶

At the most granular level of Combine’s data model is the Record. This section will outline the various areas of the Record details page.

The table at the top of a Record details page provides identifier information:

Similar to a Job details page, the following tabs breakdown other major sections of this Record details.

Record XML¶

This tab provides a glimpse at the raw, XML for a Record:

Note also two buttons for this tab:

View Document in New TabThis will show the raw XML in a new browser tabSearch for Matching Documents: This will search all Records in Combine for other Records with an identical XML document

Indexed Fields¶

This tab provides a table of all indexed fields in ElasticSearch for this Record:

Notice in this table the columns DPLA Mapped Field and Map DPLA Field. Both of these columns pertain to a functionality in Combine that attempts to “link” a Record with the same record in the live DPLA site. It performs this action by querying the DPLA API (DPLA API credentials must be set in localsettings.py) based on mapped indexed fields. Though this area has potential for expansion, currently the most reliable and effective DPLA field to try and map is the isShownAt field.

The isShownAt field is the URL that all DPLA items require to send visitors back to the originating organization or institution’s page for that item. As such, it is also unique to each Record, and provides a handy way to “link” Records in Combine to items in DPLA. The difficult part is often figuring out which indexed field in Combine contains the URL.

Note: When this is applied to a single Record, that mapping is then applied to the Job as a whole. Viewing another Record from this Job will reflect the same mappings. These mappings can also be applied at the Job or Record level.

In the example above, the indexed field mods_location_url_@usage_primary has been mapped to the DPLA field isShownAt which provides a reliable linkage at the Record level.

Record Stages¶

This table show the various “stages” of a Record, which is effectively what Jobs the Record also exists in:

Records are connected by their Combine ID (combine_id). From this table, it is possible to jump to other, earlier “upstream” or later “downstream”, versions of the same Record.

Record Validation¶

This tab shows all Validation Tests that were run for this Job, and how this Record fared:

More information about Validation Results can be found here.

DPLA Link¶

When a mapping has been made to the DPLA isShownAt field from the Indexed Fields tab (or at the Job level), and if a DPLA API query is successful, a result will be shown here:

Results from the DPLA API are parsed and presented here, with the full API JSON response at the bottom (not pictured here). This can be useful for:

- confirming existence of a Record in DPLA

- easily retrieving detailed DPLA API metadata about the item

- confirming that changes and transformations are propagating as expected

Job Type Details - Records¶

For each Job type – Harvest, Transform, Merge/Duplicate, and Analysis – the Record details screen provides a tab with information specific to that Job type.

Harvest Jobs

No additional information at this time for Harvest Jobs.

Transform Jobs

This tab will show Transformation details specific to this Record.

The first section shows the Transformation Scenario used, including the transformation itself, and the “input” or “upsteram” Record that was used for the transformation:

Clicking the “Re-run Transformation on Input Record” button will send you to the Transformation Scenario preview page, with the Transformation Scenario and Input Record automatically selected.

Further down, is a detailed diff between the input and output document for this Record. In this minimal example, you can observe that Juvenile was changed to Youth in the Transformation, resulting in only a couple of isolated changes:

For transformations where the Record is largely re-written, the changes will be lengthier and more complex:

Users may also click the button “View Side-by-Side Changes” for a GitHub-esque, side-by-side diff of the Input Record and the Current Record (made possible by the sxsdiff library):

Merge/Duplicate Jobs

No additional information at this time for Merge/Duplicate Jobs.

Analysis Jobs

No additional information at this time for Analysis Jobs.